While debugging a reasonably complex .NET application, you may often want to know which methods have subscribed to one of your events. If you are using VS2005 or VS2008, DebuggerTypeProxyAttribute, a relatively unknown feature of Visual Studio, comes to the rescue.

For a long time Visual Studio has allowed developers to customize the information displayed about a variable in the IDE during debugging. Before VS2005 the customization was done using the mcee_cs.dat (C#), mcee_mc.dat (MC++), and autoexp.dat (C++) files. Starting with VS2005, this customization is taken to the next level: VS2005 introduces two new attributes, DebuggerDisplayAttribute and DebuggerTypeProxyAttribute, that allow you to change the information displayed in the Visual Studio debugger.

Typically you would apply these attributes to a class in your own code, and the VS debugger would use them when displaying that class. However, there is a trick that lets you use these attributes outside of the original code. This means that you can change the information displayed about ANY class, even classes you did not write yourself. VS2005 and later use AutoExp.dll, which provides display information about various types to the debugger. The source for AutoExp.dll is provided in the AutoExp.cs file, installed with Visual Studio in the Common7\Packages\Debugger\Visualizers\Original\ folder. You can add your own code to this file, compile AutoExp.dll, and place it in either the Common7\Packages\Debugger\Visualizers folder or the My Documents\Visual Studio 2005 (or Visual Studio 2008)\Visualizers folder. I learned about this trick a while back from John Robbins’ excellent book Debugging .NET 2.0 Applications. It turns out that Visual Studio loads all valid assemblies from the Visualizers folder, and if an assembly contains visualizers or other debugger-related types and attributes, Visual Studio uses them during the debugging session.
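As a rough sketch of the two ways these attributes can be applied, the following C# compiles on its own. The Order and OrderDebugView classes, and the choice of System.Version as a Target, are hypothetical and only for illustration. The class-level attributes are the typical usage; the assembly-level DebuggerDisplay with a Target is the kind of entry you would add to AutoExp.cs (or to another assembly in a Visualizers folder) to affect a type you did not write.

```csharp
using System;
using System.Collections.Generic;
using System.Diagnostics;

// Assembly-level form: Target names a type you did not write. System.Version is an
// illustrative choice only; entries of this shape are what goes into AutoExp.cs
// (or another assembly in a Visualizers folder) for the debugger to pick up.
[assembly: DebuggerDisplay("v{Major}.{Minor}.{Build}", Target = typeof(Version))]

// Class-level form: the attributes sit on your own (hypothetical) class.
[DebuggerDisplay("Order {Id}: {Lines.Count} line(s)")]
[DebuggerTypeProxy(typeof(OrderDebugView))]
public class Order
{
    private readonly int m_id;
    private readonly List<string> m_lines = new List<string>();

    public Order(int id)
    {
        m_id = id;
    }

    public int Id
    {
        get { return m_id; }
    }

    public List<string> Lines
    {
        get { return m_lines; }
    }
}

// The debugger constructs the proxy with the object being inspected and shows the
// proxy's members in place of the object's own fields when the node is expanded.
internal class OrderDebugView
{
    private readonly Order m_order;

    public OrderDebugView(Order order)
    {
        m_order = order;
    }

    // RootHidden lists the array elements directly under the expanded order.
    [DebuggerBrowsable(DebuggerBrowsableState.RootHidden)]
    public string[] Lines
    {
        get { return m_order.Lines.ToArray(); }
    }
}

public static class AttributeDemo
{
    public static void Main()
    {
        Order order = new Order(42);
        order.Lines.Add("Widget");

        // Break here and hover over 'order' to see the DebuggerDisplay string,
        // then expand it to see the OrderDebugView members.
        Console.WriteLine("{0} {1}", order.Id, order.Lines.Count);
    }
}
```

The sketch sticks to C# 2.0 syntax (no auto-properties or `var`) so that it also builds in VS2005.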
Now back to the topic of this post: to make it easy to see which methods have subscribed to an event, I wrote a class called MulticastDelegateDebuggerProxy that acts as a debugger proxy for the MulticastDelegate class. Since every event is backed by a delegate type derived from MulticastDelegate, MulticastDelegateDebuggerProxy is used for all events. DebuggerTypeProxyAttribute is applied at the assembly level to tell the Visual Studio debugger that MulticastDelegateDebuggerProxy is the proxy for MulticastDelegate. The code for MulticastDelegateDebuggerProxy is listed below.

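```csharp
using System;
using System.Collections.Generic;
using System.Diagnostics;
using System.Reflection;
using System.Text;

// Tell the debugger to display every MulticastDelegate (and therefore the delegate
// behind every event) through MulticastDelegateDebuggerProxy.
[assembly: DebuggerTypeProxy(typeof(MulticastDelegateDebuggerProxy),
    Target = typeof(MulticastDelegate))]

public class MulticastDelegateDebuggerProxy
{
    private string[] m_methods;

    // The debugger calls this constructor with the delegate being inspected.
    public MulticastDelegateDebuggerProxy(MulticastDelegate del)
    {
        m_methods = GetMethodList(del);
    }

    [DebuggerDisplay(@"Subscribers:\{{Methods.Length}}")]
    public string[] Methods
    {
        get { return m_methods; }
    }

    // Builds a C#-like signature string for each method in the invocation list.
    private static string[] GetMethodList(MulticastDelegate myEvent)
    {
        Delegate[] delegates = myEvent.GetInvocationList();
        string[] methods = new string[delegates.Length];

        for (int i = 0; i < delegates.Length; ++i)
        {
            MethodInfo m = delegates[i].Method;
            List<string> parts = new List<string>();

            // Access modifier, including internal and protected internal.
            if (m.IsPublic) parts.Add("public");
            else if (m.IsFamilyOrAssembly) parts.Add("protected internal");
            else if (m.IsFamily) parts.Add("protected");
            else if (m.IsAssembly) parts.Add("internal");
            else if (m.IsPrivate) parts.Add("private");

            if (m.IsStatic) parts.Add("static");

            if (m.IsVirtual)
            {
                // ReuseSlot: overrides a base slot. NewSlot and not final: a new virtual.
                // NewSlot and final: a plain (non-virtual) interface implementation.
                if ((m.Attributes & MethodAttributes.VtableLayoutMask) == MethodAttributes.ReuseSlot)
                    parts.Add("override");
                else if (!m.IsFinal)
                    parts.Add("virtual");
            }

            // Use each method's own return type: delegate variance lets a handler
            // return a more derived type than the delegate declares.
            parts.Add(m.ReturnType.Name);

            StringBuilder sb = new StringBuilder();
            foreach (ParameterInfo param in m.GetParameters())
            {
                if (sb.Length > 0) sb.Append(", ");
                sb.Append(param.ParameterType.FullName ?? param.ParameterType.Name)
                  .Append(' ').Append(param.Name);
            }

            // Dynamic methods have no reflected type.
            string typeName = m.ReflectedType != null ? m.ReflectedType.FullName : "<dynamic>";
            parts.Add(string.Format("{0}.{1}({2})", typeName, m.Name, sb.ToString()));

            // Joining only the non-empty parts avoids runs of spaces in the output.
            methods[i] = string.Join(" ", parts.ToArray());
        }

        return methods;
    }
}
```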
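To try the proxy out, you need a delegate with several subscribers to inspect. The sample below is a hypothetical test program (Publisher, Listener, and EventSubscribersDemo are illustrative names, not part of the original code). It subscribes an instance method, a static method, and an anonymous method to one event, then walks the invocation list with GetInvocationList, the same call the proxy relies on. Breaking after the subscriptions and expanding `publisher` in the Locals window shows its Changed field through the proxy.

```csharp
using System;
using System.Reflection;

// Hypothetical publisher with a single field-like event.
public class Publisher
{
    public event EventHandler Changed;

    // Inside the declaring class, 'Changed' refers to the compiler-generated
    // delegate field, so its invocation list can be read here (not from outside).
    public Delegate[] GetSubscribers()
    {
        EventHandler handler = Changed;
        return handler == null ? new Delegate[0] : handler.GetInvocationList();
    }
}

// Hypothetical subscriber with an instance handler.
public class Listener
{
    public void OnChanged(object sender, EventArgs e) { }
}

public static class EventSubscribersDemo
{
    private static void StaticHandler(object sender, EventArgs e) { }

    public static void Main()
    {
        Publisher publisher = new Publisher();
        publisher.Changed += new Listener().OnChanged;              // instance method
        publisher.Changed += StaticHandler;                         // static method
        publisher.Changed += delegate(object s, EventArgs e) { };   // anonymous method

        // Break here and expand 'publisher' to see Changed through the proxy.
        foreach (Delegate d in publisher.GetSubscribers())
        {
            MethodInfo m = d.Method;
            // Anonymous methods appear under compiler-generated type and method
            // names whose exact form depends on the compiler version.
            Console.WriteLine("{0}.{1}", m.DeclaringType.FullName, m.Name);
        }
    }
}
```

Only the declaring class can read the delegate behind a field-like event, which is why GetSubscribers has to live inside Publisher. The debugger has no such restriction, and that is what makes a proxy like MulticastDelegateDebuggerProxy convenient.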

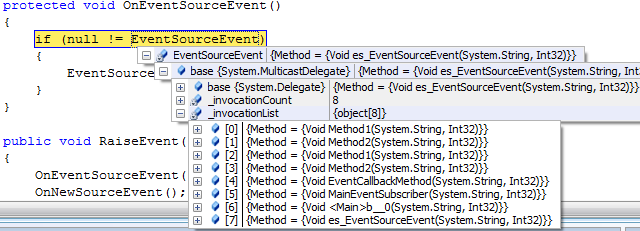

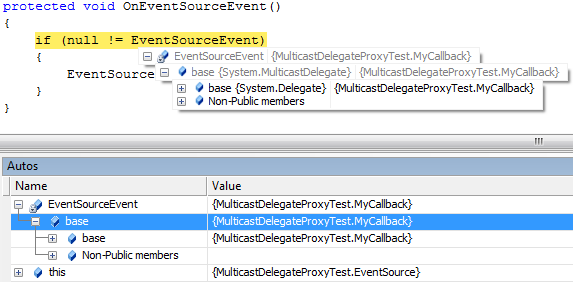

The information displayed in VS2005 by default is shown below.

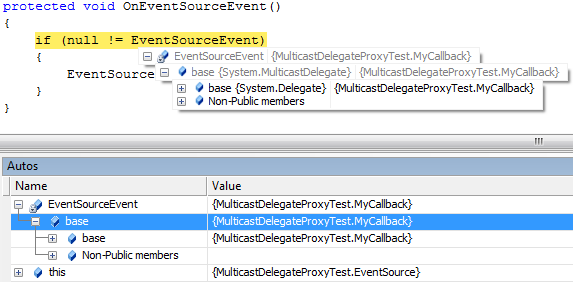

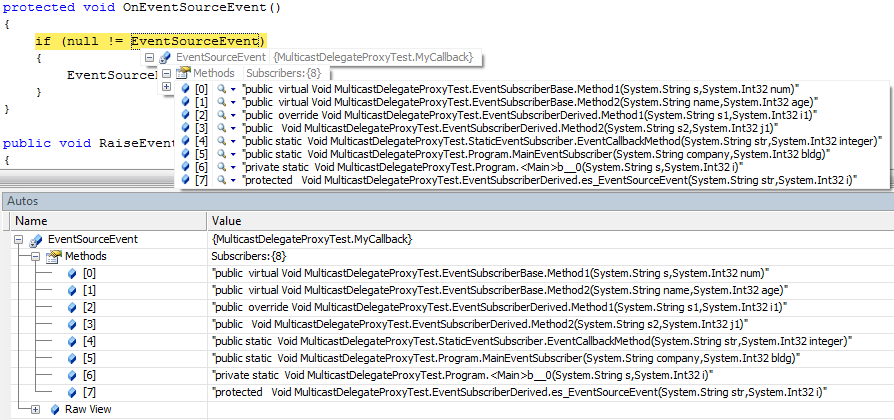

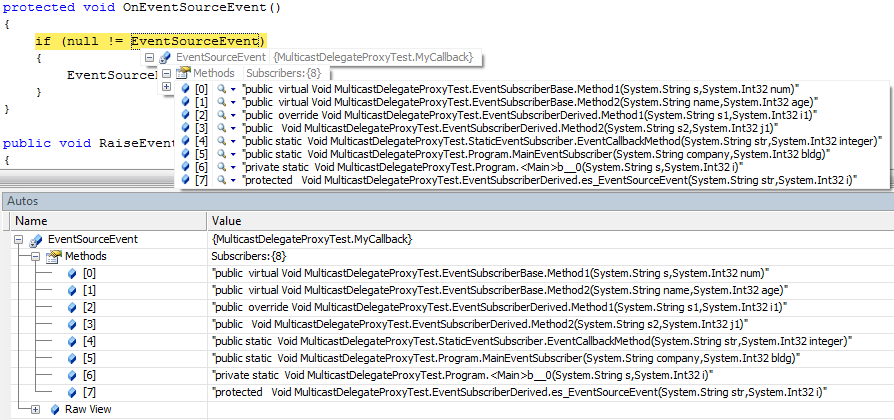

The information displayed in VS2005 with MulticastDelegateDebuggerProxy is shown below. The proxy displays each subscriber's fully qualified method name along with the parameter names used in the method.

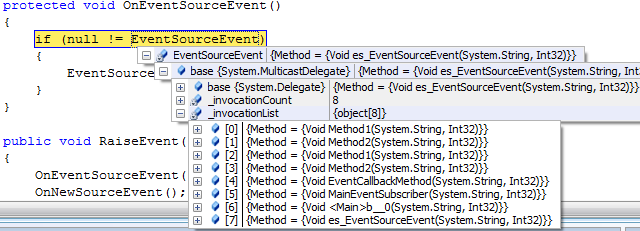

In VS2008, the default display is slightly better than in VS2005, because it shows _invocationList, which can be drilled down to reach the actual methods. However, the default display can still be ambiguous, as shown in the screenshot below. MulticastDelegateDebuggerProxy does a much better job of displaying the same information.